On January 17, 2019, the Data Management Policy was passed unanimously by the Committee on Information Technology (COIT), San Francisco’s IT governance body. The policy was crafted with departments to define and make clearer what it means to manage data as a strategic asset.

At a basic level, it establishes that data should be tracked and understood much as we track our physical assets like buildings, parks or ambulances (read Andrew Nicklin’s excellent synopsis of why this is so important). The policy builds on practices the City has followed for years, such as inventorying data and databases. It also corrals other related policies into a digestible form, providing guidance on how best to put our data assets to work and realize the full potential of the City’s data.

Data is an asset

Managed well, data can be useful in everything from service delivery (helping people get housing) to program management (understanding how many people are getting housing) to policy making (deciding on how to improve housing access). The five sections of the policy work together, reinforcing other policies and continuing to move the City toward maximizing data’s value.

To manage data as an asset, the policy covers:

- The inventorying and classification of data in database and dataset inventories (Section 1)

- Processes and policies for appropriately sharing open and confidential data (Sections 2 and 3)

- An approach for identifying and actively managing interdepartmental data and data standards (Section 4)

- The roles and responsibilities of those involved in data management (Section 5)

A modular policy

This policy also threads together other policies and standards from disaster preparedness and recovery to data classification. It places these things in context and acts as an aggregator and synthesizer of these deeper policies.

We imagine that we will need to flex and change the policy as the world changes. COIT has standardized on an annual review of all policies to make sure they stay relevant, which will allow the Data Management Policy to adapt as needed.

Pushing into new territory

While much of the policy is about codifying and clarifying existing practices, it gently pushes into new territory to modernize data sharing and integration.

We know that, as a city, our current forms of data sharing and integration across data systems are administratively burdensome. We also know that there will always be multiple systems to meet all the needs of the City.

The City’s challenge of sharing and integrating across systems is not unlike that of the private sector, where many teams build to a business need with different tools across different systems. However, in the private sector, modern service architectures and application programming interfaces (APIs) ease data exchange challenges by shifting away from ad hoc approaches to something more systematic and visible.

Think of an API as a “contract” defining the exchange of data for others to understand and use when building something that depends on that data. For example, a website where you can apply for a permit and check its status may be built using APIs. A well-documented and supported API can make the integration easier for developers or analysts in the City (not unlike what we’ve seen with Departments using DataSF’s open data APIs for automated dashboarding).

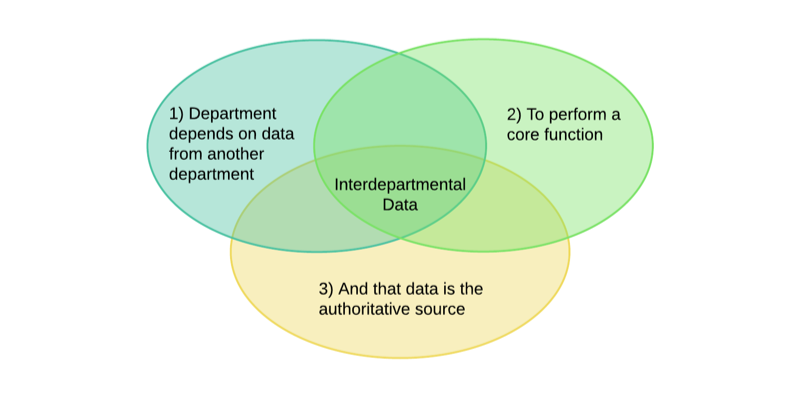
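To make the “contract” idea a little more concrete, here is a minimal sketch in Python of what a consumer might rely on when checking a permit’s status. Everything in it is hypothetical and for illustration only: the `PermitStatus` fields, the `ALLOWED_STATUSES` values and the `parse_permit_status` helper are assumptions, not part of the policy or of any City system.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class PermitStatus:
    """Hypothetical contract for a permit status response."""
    permit_id: str
    status: str
    updated_on: str  # assumed to be an ISO 8601 date string


# Assumed set of status values the provider promises to use.
ALLOWED_STATUSES = {"submitted", "in_review", "approved", "denied"}


def parse_permit_status(payload: dict) -> PermitStatus:
    """Check a raw payload against the contract before using it."""
    expected = {f.name for f in fields(PermitStatus)}
    missing = expected - set(payload)
    if missing:
        raise ValueError(f"payload is missing fields: {sorted(missing)}")
    if payload["status"] not in ALLOWED_STATUSES:
        raise ValueError(f"unknown status: {payload['status']!r}")
    # Extra keys in the payload are ignored; only contract fields are kept.
    return PermitStatus(**{name: str(payload[name]) for name in expected})
```

The point of the sketch is that both sides can build against the same documented shape: the provider knows what it has promised to send, and a developer or analyst knows what they can depend on.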

Applying an “API-centric” or data service approach to all data across the City overnight is not feasible or even appropriate. That’s why Section 4 of the policy starts with a definition of interdepartmental data, which is data that:

- At least one department depends on from another department

- To perform a core function

- And is the authoritative source
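Read together, the three criteria above work as a single test that a dataset either passes or fails. A rough sketch in Python, assuming a hypothetical inventory record (the `Dataset` fields and the `is_interdepartmental` function are illustrative names, not taken from the policy):

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Dataset:
    """Hypothetical inventory record for one dataset."""
    name: str
    owner: str                     # department that maintains the data
    consumers: Set[str]            # departments that depend on the data
    supports_core_function: bool   # a consumer needs it for a core function
    is_authoritative: bool         # this is the authoritative source


def is_interdepartmental(dataset: Dataset) -> bool:
    """Return True only when all three criteria hold."""
    # The owner using its own data does not count as a dependency
    # "from another department".
    other_departments = dataset.consumers - {dataset.owner}
    return (
        bool(other_departments)
        and dataset.supports_core_function
        and dataset.is_authoritative
    )
```

A dataset used only by the department that owns it would fail the first criterion, however important it is internally.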

The policy then:

- Compels the City to identify data according to that definition and strategically prioritize that data for sharing

- Defines a minimum standard of what good looks like for managing this data as a service (in the Policy Appendix)

This section lays the groundwork for understanding where we are now so that the City can prioritize modernization efforts around strategic value, gently pushing the bar up.

Thanks to a great team

Policy is a team sport. The team was made up of stakeholders from across the City. Special thanks go to department representatives in the working group including former DataSF staff, the COIT Architecture Review Board subcommittee, the COIT committee, and our Citywide CIO.

While there are more games to play in the season, we think the City team did a great job delivering a win for data! And if you haven’t yet, check out the full text of the policy.