This is the first of a four-part series on managing data science projects in government.

- Part 1: How to solicit and select data science projects

- Part 2: How to scope data science projects

- Part 3: How to deliver a data science project

- Part 4: How to tell your data science story

If you are starting a data science service in your jurisdiction, your first task will be to develop a backlog of projects. This article walks through how we solicit and select data science projects.

How to solicit data science projects

If you’re starting a data science service, you may have a list of data science projects handed to you. However, once you work through those or if you’re starting from scratch, you’ll need to identify new project ideas. At a minimum, you’ll probably hold brown bags, office hours and brainstorming sessions. Below are some additional ideas.

Try using an application process to solicit projects

The biggest barrier to data science projects is identifying questions that people care about and that are amenable to data science. An application process – where clients have to apply to use your data science service – lowers this barrier because:

- It surfaces committed clients with a priority project and an eager ear; and

- The solicitation process helps define what a good data science project is.

An application process relies on you having an existing reputation and a means of reaching clients (listservs, newsletters, etc.). If you don’t have these, make sure that offering data science services is actually what your jurisdiction needs.

Define and differentiate your service

Not everyone will know what data science is, and existing analysts may want to understand how your service differs from their work. So, you’ll need to define what you are “selling” and, more importantly, what you are not selling.

We recommend listing current analytic resources that are available and then showing how your service is different. It is easier to define (and explain) a new, unknown service in relation to existing, well-known services. Failure to do this may result in numerous requests for dashboards!

Define the expected output

At DataSF, we insist that all projects have “service change” as a primary goal. This means clients will need to actually change how they do business. DataSF will deliver tools, decision aids and models, but never white papers or research briefs. You may take a different approach – that’s fine. Just state it clearly and consistently to help manage expectations.

Speak their language and use lots of examples

All the operational smarts needed to identify good data science projects exist in departments. You have to help these subject matter experts look at their business processes like a data scientist, but do so without talking in statistical terms. Remember, the goal is to generate applications, not to impart the finer workings of machine learning.

Richard Todd and Oliver Wise, formerly of NOLAlytics, developed a thoughtful approach. Their analytics typology uses a set of “recipes” that speak to common business problems. DataSF refined these typologies and created infographics and other visual collateral to help explain the project types.

Use a short application form to increase quantity and quality

If you do use an application process, keep the form short to increase the number of submissions. At the same time, asking for a 100- to 200-word description of each of the following filters out uncommitted projects:

- What the problem is

- Why it is important

- What the service change would be

Application forms of any length fail to solicit all the information we need to evaluate a project. The form is best used as a tool to identify questions for a 30-minute follow-up meeting.

How to select projects

Once you receive your applications, we recommend having a follow-up conversation with each applicant to make sure you understand the submission well enough to evaluate it properly.

Screen projects against your criteria

Once we understand the project, we screen it against our selection criteria (which were communicated throughout the solicitation process):

- Answerable or potentially answerable by data science

- Level of impact

- Alignment with mayoral priorities

- Viable path towards service change

- Appropriate project champion

- Solvable within the cohort timeframe

This screening helps you identify which of the submissions are viable projects.
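If you track applications in a script or notebook, the screen can be recorded as one yes/no judgment per criterion. The sketch below is only an illustration, not DataSF's tooling: the `Screening` class, its field names and the rule that a project must meet every criterion to be viable are all assumptions made for the example.

```python
from dataclasses import dataclass, fields


@dataclass
class Screening:
    """One yes/no judgment per selection criterion (hypothetical field names)."""
    answerable_by_data_science: bool
    sufficient_impact: bool
    aligned_with_mayoral_priorities: bool
    viable_path_to_service_change: bool
    appropriate_champion: bool
    solvable_within_cohort: bool


def unmet_criteria(screening: Screening) -> list[str]:
    """Return the names of the criteria the project does not meet."""
    return [f.name for f in fields(screening) if not getattr(screening, f.name)]


def is_viable(screening: Screening) -> bool:
    """Assumption: a project is viable only if it meets every criterion."""
    return not unmet_criteria(screening)


# Hypothetical application with no clear path to service change
example = Screening(True, True, True, False, True, True)
print(is_viable(example))       # False
print(unmet_criteria(example))  # ['viable_path_to_service_change']
```

Keeping the list of unmet criteria, rather than only a yes/no result, gives you something concrete to discuss with an applicant whose project does not pass the screen.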

Score viable projects on complexity and value

Once you’ve screened the projects, you’ll want to score the viable ones. You have limited capacity, which means you are going to have to make some hard choices between good projects. Even if you take on all the projects, scoring will help you plan timing and sequencing.

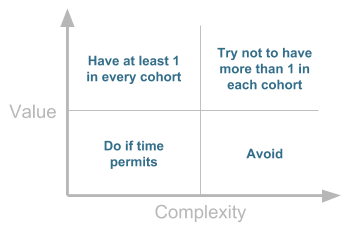

As a team, DataSF discusses and places each project on a 2×2 that plots projects by value and complexity. This helps us identify which combination of complex and simple projects can be accomplished in the cohort timeframe. It also helps us identify projects that we should avoid – mainly those that are high on complexity and low on value.

Scoring projects using this 2×2 matrix helps ensure we have a mix of small, medium and large projects, all of medium to high value.
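Placing projects on the 2×2 is a team discussion rather than a formula, but the resulting grid is easy to record afterwards. The sketch below assumes each project has been given a value score and a complexity score from 1 to 5, with 3 as the cutoff between low and high. The scale, the cutoff and the project names are hypothetical and do not come from DataSF's process.

```python
from collections import defaultdict

MIDPOINT = 3  # assumed cutoff on a 1-5 scale; not from the article


def quadrant(value: int, complexity: int) -> str:
    """Name the 2x2 cell for a project scored on value and complexity."""
    v = "high value" if value >= MIDPOINT else "low value"
    c = "high complexity" if complexity >= MIDPOINT else "low complexity"
    return f"{v}, {c}"


# Hypothetical projects: name -> (value, complexity)
projects = {
    "Project A": (5, 2),
    "Project B": (4, 5),
    "Project C": (1, 4),
}

# Group project names by the quadrant they fall into
grid = defaultdict(list)
for name, (value, complexity) in projects.items():
    grid[quadrant(value, complexity)].append(name)

# Projects to avoid: high on complexity and low on value
avoid = grid["low value, high complexity"]
print(avoid)  # ['Project C']
```

Grouping by quadrant makes it straightforward to check that the cohort mixes simple and complex projects while leaving out the low-value, high-complexity ones.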

Provide gentle off ramps

Remember the user. At this point, departments have dedicated time to submitting their idea and some have even met with you to discuss their application in detail. In the relationship-heavy world you operate in, an impersonal “thanks but no thanks” email is not going to cut it.

You want to leave a positive impression that will encourage future submissions. At DataSF, we employ ‘gentle off ramps.’ If a project is not ripe for data science, we try to identify another option from our (or a partner’s) suite of services. Often this is our automation services via the open data portal, a data academy class or dashboarding help. It doesn’t matter that some don’t follow up; what’s important is that we offered.